What is “Chatbot Therapy”?

Chatbot Therapy is AI-powered mental health care.

It generally serves as a substitute for in-person or tele-health style live counseling traditionally provided by another human – not just any human, but one who is educated, trained, licensed, and experienced in providing mental health care and counseling to individuals in need.

AI Chatbots can be programmed with a wide range of therapy models: cognitive behavioral therapy, dialectical behavior therapy, and even mindfulness. The hundreds of Chatbot Therapy-like apps available all aim to fill the widening gap left by the shortage of mental health care providers in the U.S.

The three most popular apps that parents must be aware of are Youper, Wysa, and Woebot. Here are FIVE points all Brave Parents must know about these apps.

#1 Displacement of Relationships

No one can stop talking about how we don’t talk to each other. Parents recognize this and are unsettled by the isolation and disconnection of present childhood.

What no one will talk about (or at least do anything to change) is how technology is constantly displacing relationships. When we use social media, for example, we give up (to some extent) phone calls, face-to-face interactions, and cards. With so much engagement with technology, isolation and disconnection are the new norm.

AI chatbots take relationship displacement and isolation to a whole new level. Make the chatbot a therapist, counselor, mentor, or life-coach and the result is not only displacement but also deep confusion.

Woebot proudly boasts: “Woebot’s ability to form close relationships helps make our solutions highly engaging. In as little as 3 to 5 days Woebot has been shown to form a trusted bond with users.”

In Youper’s update of their app the developers say: “Hello there! We’ve just rolled out an update for Youper mental health chatbot, improving the AI so your chats are even more engaging and feel super natural! Thank you to all our loyal users who’ve been with us on this journey. Your feedback, love, and patience make all the difference. Here’s to many more heart-to-heart chats!”

The cure for the woes of adolescence and loneliness brought on by technology is not more technology. The fix for our isolation issues is not super engaging and trusted bonds with chatbots. Chatbot therapy only displaces relationships further. Frankly, the best therapy is to displace the technology with real-life relationships.

#2 Anonymity

Many of these Chatbot Therapy apps tout the user’s experience within the app as safe, trusted, private, secure, and anonymous.

A few of these keywords are not about what kids need, but rather what they want. That is a distinction we, as parents, should recognize.

We should also recognize the innate human desire to hide information, sneak away, and keep the difficult things in the dark. We rarely change for the better when we do those things, yet these apps encourage that natural tendency and boast about their ability to do so!

Teenagers may need some of the things those keywords promise, but they also need accountability, challenge, hope, admonishment, and encouragement in a face-to-face setting. They do not need more anonymity!

It’s too easy for these apps to turn into venting or woe-is-me victimhood sessions for teenagers. Even more, it’s easy for the teen to close the app once the chatbot responds with a preferred and tangible response. The real-life social norms and expectations that keep an adolescent in the chair or on the couch during counseling sessions are a helpful resource for any counselor to utilize. Anonymity makes it all too easy to walk away when it gets uncomfortable.

Additionally, it must be considered whether or not these apps will stay secure and anonymous.

#3 Empathy

Chatbot Therapy apps provide conversational therapy by claiming anthropomorphic attributes such as empathy, compassion, and experience. No matter how real a chatbot may seem, it is only as good as the data it is trained on.

Learning empathy from a large language model (LLM) cannot and never will be the same as empathy learned from living as a human. In fact, studies have shown that technology is reducing our ability to empathize.

Should we allow our children to learn from chatbots especially when crucial character traits like empathy are on the line?

The qualities that chatbots promote, such as compassion, empathy, and understanding, all go hand in hand with body language and non-verbal cues. An AI chatbot can neither display body language nor discern a user’s. As a certified biblical counselor, I estimate that at least 15% of each counseling session is spent observing and interpreting the non-verbal cues of my counselee.

The ability to ask questions like “Why are you looking down?” or “How come you broke eye contact?” is only available in face-to-face sessions. Maybe you can get some of that through tele-therapy, but it is never as good as when you are in the same room.

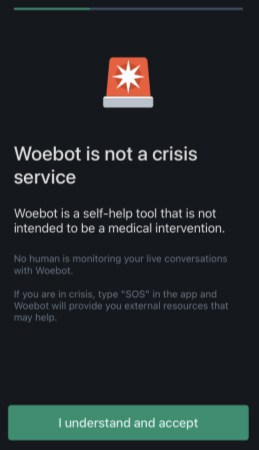

#4 Lack of Accountability or Crisis Help

Due to the nature of anonymity, there is a parallel lack of accountability.

In a typical counseling or psychological therapy scenario, the parent of the child receiving therapy has some, albeit limited, interaction with the counselor. If there is a life-threatening, potentially harmful, or otherwise pressing concern to be addressed, the counselor-parent relationship allows for this dialogue. This collaborative care is necessary for accountability and is not available through Chatbot therapy.

Furthermore, due to the lack of FDA approval or regulation, there is a serious concern regarding crisis intervention. Certified counselors are mandatory reporters. If an adolescent is being abused, or if they share plans to harm themselves or end their life, the counselor is required to report this to authorities and parents, respectively. A Chatbot therapist cannot intervene for the adolescent’s safety.

Most of the Chatbot apps do state they are not crisis counselors, but this assumes that adolescents who use the apps can recognize whether they are in crisis or not. Because the apps boast that deep trust can be formed between the bot and the user, who is to say that a teen who wasn’t in crisis at the beginning doesn’t enter into crisis mode because they feel safe sharing trauma with the chatbot that they’ve never told another human? How does that teen get the immediate help they need? How will they know it’s time to move from chatbot to human counselor when their only established trusting relationship is with a computer?

The fact that the apps promote “AI powered Cognitive Behavioral Therapy (CBT)” while simultaneously claiming they do not provide “any form of medical care, medical opinion, diagnosis or treatment” should raise red flags for everyone – especially parents.

#5 App Ratings and Review

App Store: 12+ (All three apps mentioned)

Google Play: E for Everyone (All three apps mentioned)

Brave Parenting: Do NOT recommend for either adults or children

There are too many concerns with these apps for children and adults. When people suffer and naturally pull away from others, the last place they should turn is their phone to engage with an AI chatbot. This is not healthy for anyone.

Chatbots’ intentional design is to play the role of a human. They wear a disguise of anthropomorphic features which ultimately leads to confusion. It is not a good practice (or even ethical!) to confuse and confound someone who is already in a vulnerable state while they receive therapy.

Not only that, but a child whose brain is still developing and highly impressionable will find it hard to distinguish between good and bad advice from the chatbot. And without the oversight or guidance of a parent, this could lead to devastating consequences.

For this reason (and many others), we recommend that ALL APP DOWNLOADS be approved by a parent. There is far too much available through the app stores that destroys children for a parent to resolve, “Not my kid.”

Biblical Consideration

Counseling is intrinsically theological. Even the atheist counselor or counselee has thoughts about God. They believe He doesn’t exist but that’s still a thought about Him.

Since it’s intrinsically theological then the best place to go is the Bible. It provides the clearest understanding of reality and it’s an unchanging source of information. Even as a biblical counselor, I have a library shelf of secular counseling material but in 10-15 years all that material will change because it will become outdated and superseded by new research. That constraint, thankfully, does not apply to the Bible which means I can rely on it to sufficiently convict a counselee’s heart, to teach and admonish, and to give hope.

We must also remember that a Chatbot Therapist cannot hold a counselee accountable or offer support. Another key feature of biblical counseling is that it happens in the context of community, namely the body of Christ. If a teenager is struggling with depression, the counselor is not alone in walking with this teenager. His or her parents can come in for counseling, family counseling can be arranged, and mentoring can be set up with youth leaders or even with an older couple for the parents. Suffering should not happen alone in the church. We are called to “bear one another’s burdens and fulfill the law of Christ” (Gal. 6:2). The body of Christ can support the acute physical, emotional, and spiritual needs of the moment for the glory of God and the love of others.

AI Chatbots are a lone-ranger counseling technique that will alienate the counselee from a community like their family or friends, which can ultimately lead to a greater degree of harm for the counselee.