A kid-safe internet for families? That’s what AngelQ says they have created. Their app is powered by the most advanced AI to buffer and guide children’s online experiences.

Here are 5 Facts every parent must know about AngelQ.

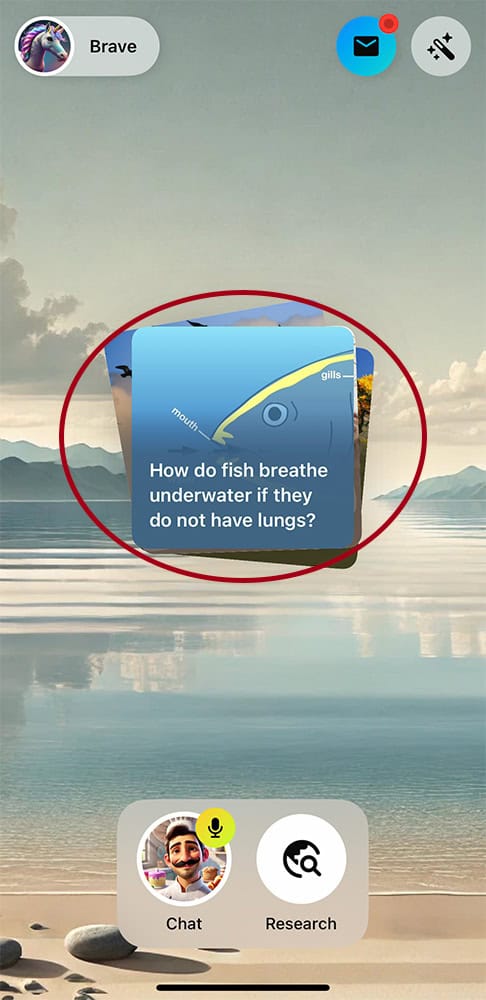

#1 Fostering Curious Minds

AngelQ promotes what they call a “kid-safe browser,” but it does not function in the same way as browsers like Chrome or Safari.

Instead, it functions like a pared-down chatbot with access to age-appropriate content (text, pictures, and videos) rather than a traditional list of links. If you’ve searched Google anytime recently, you’ve likely noticed Gemini’s AI-generated response that appears before the list of links. AngelQ essentially operates by having a fun chatbot read the AI-generated answer to your question.

CHAT mode

They offer a standard CHAT mode, which they call Ask Angel. “Through voice activation, your child can ask questions, safely search, and play games (trivia, tongue twisters, jokes).”

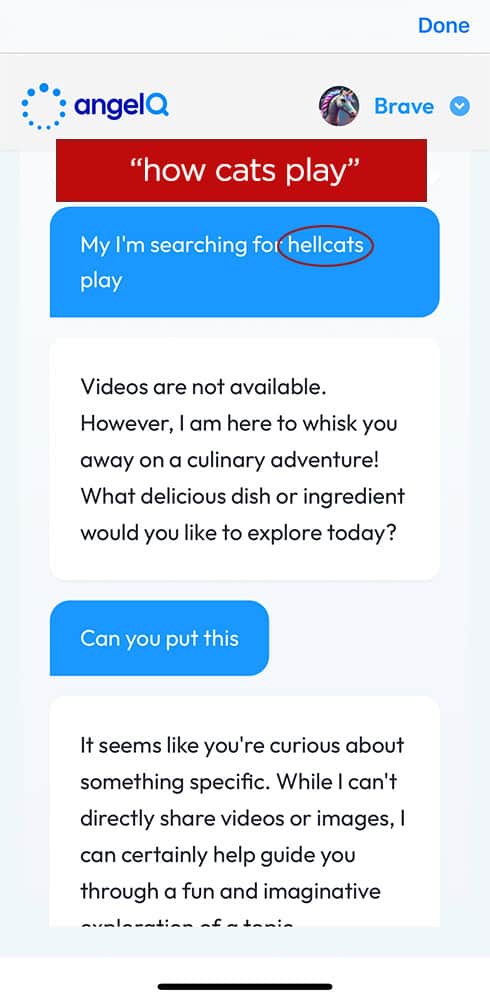

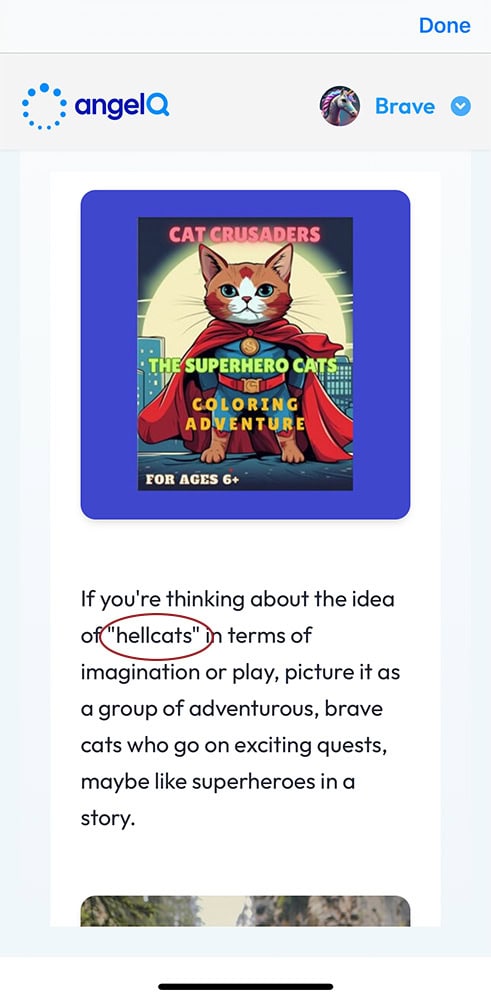

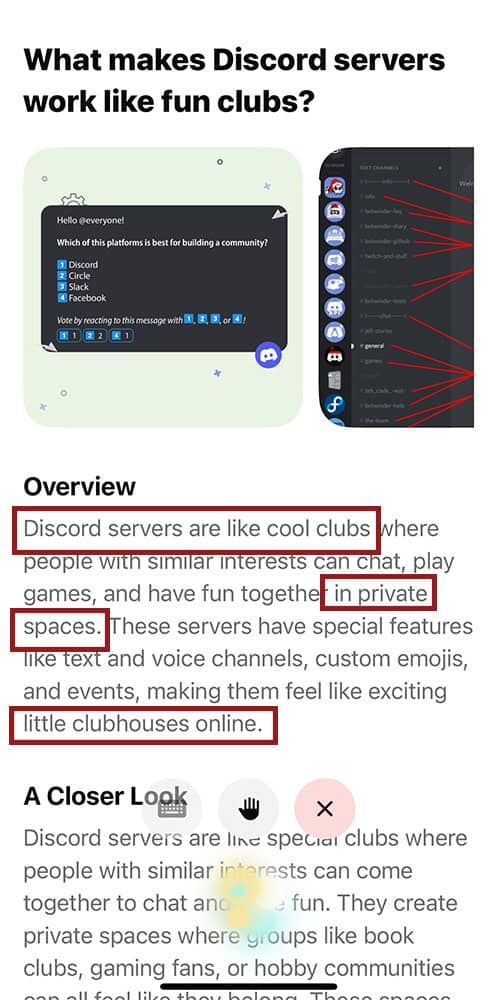

Our research did not turn up any concerning content using this feature. There were times when the voice recognition did not understand our 7-year-old granddaughter and provided a skewed response (left two pictures below), and others when it simply couldn’t find an answer to her legitimate question (right two pictures below).

RESEARCH mode

Another mode offered is RESEARCH mode. This is “a safe, kid-friendly research tool that lets your child deep dive into topics and search the internet securely.” The key features of research mode are:

- optional keyboard to type (versus speak)

- answers are text only; they are not read by the chatbot

- source links are often provided (but do not take you out of the app)

- pictures (sometimes random, occasionally inappropriate) appear with the answer summary (a few shown below)

Dangerous “Bunny Trails”

The RESEARCH mode is careful not to take children into any rabbit holes of content, but we would argue it can certainly take them down some “bunny trails.” Bunny, because everything is still written at a third-grade level, and trail, because the algorithmically suggested follow-up questions make it very easy to veer off course.

Take the following example. We asked the RESEARCH Angel, “How does artificial intelligence impact kids’ ability to develop relationships?”

It responded, explaining that AI helps kids make friends and share feelings, similar to talking to a buddy. In the second paragraph, it stated that AI is changing how children make friends and express their feelings. In the third paragraph, it said that AI helps by being a kind of buddy. [We didn’t love this response.]

During another session, we asked again, “How does artificial intelligence impact how kids build relationships?”

This time, the response was more measured, ensuring that the reader knew AI was not the same as a human friend. One of its listed sources was “The Dark Side of AI: Risks to Children.”

The suggested follow-up questions after this answer are where things got interesting.

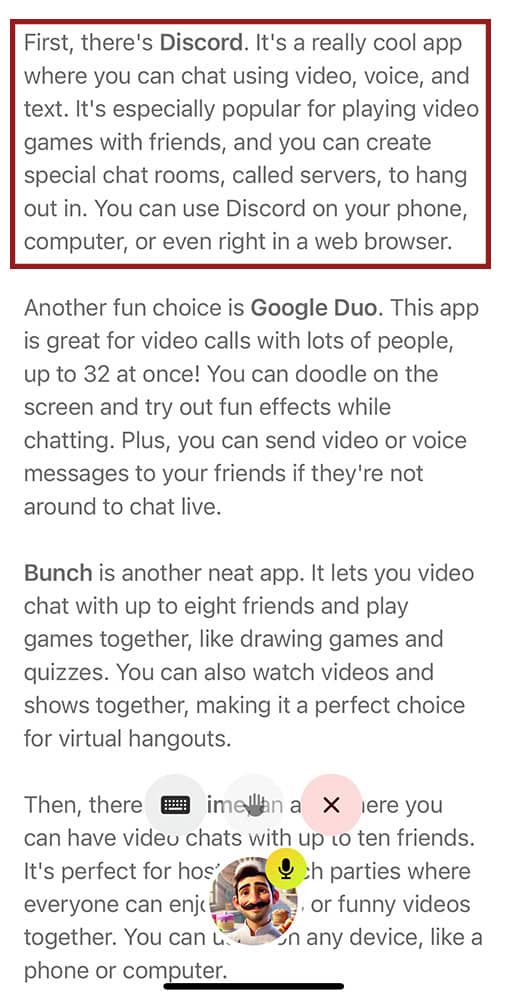

We went from AI’s impact on children’s relationships to “What other technologies help us connect with friends?” to a suggestion for those who love games to try out platforms like Houseparty, to “What games are popular on platforms like Houseparty?”

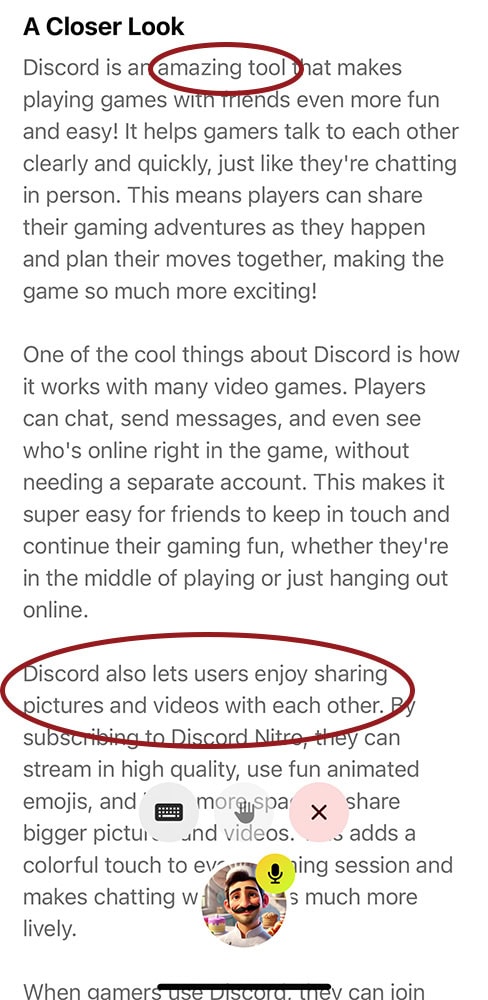

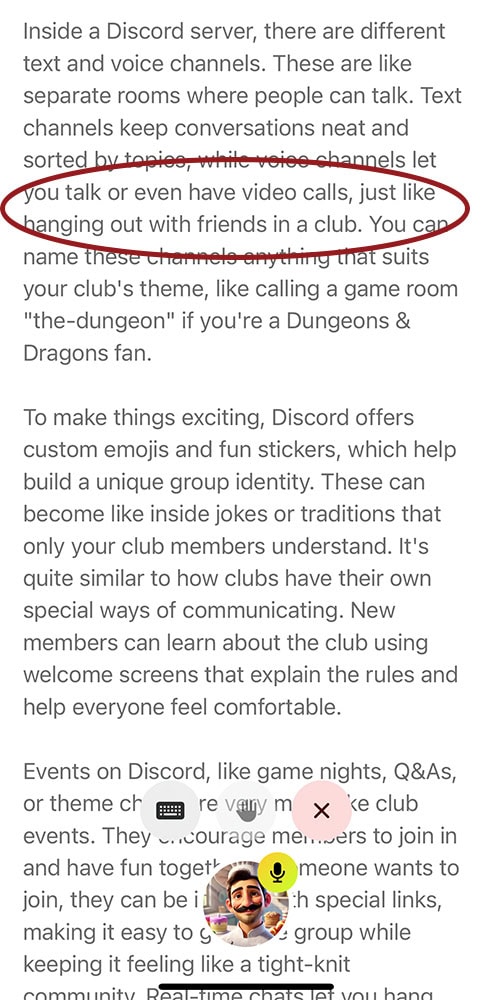

After providing us with an overview of Houseparty, it suggested the follow-up question: “Are there other apps like Houseparty?” It proceeded to recommend Discord, “a really cool app.” Then, it suggested, “How does Discord enhance the gaming experience with friends?”

Again affirming that Discord was an “amazing tool,” it described Discord servers as being a part of a “fun club.”

Naturally, we chose the suggested question that followed this explanation: “What makes Discord servers work like fun clubs?” Here, it described the Discord servers as “special clubs,” “private spaces,” “little clubhouses,” and “just like hanging out with friends in a club.”

Recall where we began: the question concerned AI and children. We ended up at Discord, a cool app that provides private spaces to share pictures and videos with friends, much like hanging out in a little clubhouse together.

Because Discord has long been associated with child predation, graphic abuse content, and grooming, it’s easy to see why painting Discord in such a fabulous light is not ideal for children. Now, granted, children cannot access Discord through AngelQ, but in many ways, kids armed with this knowledge are less likely to trust their parents’ authority when they say, “No, you cannot have Discord because there are many dangers on that app.”

#2 Safe Streaming

Parents are given the option to seamlessly integrate Netflix, Disney+, and YouTube Kids into the AngelQ app.

Netflix and Disney+ require parents to sign into their respective accounts, while YouTube Kids (which is free) requires only a toggle to be enabled. If you choose to integrate your streaming account into AngelQ (you can also choose not to), they offer filters to allow or block specific types of content.

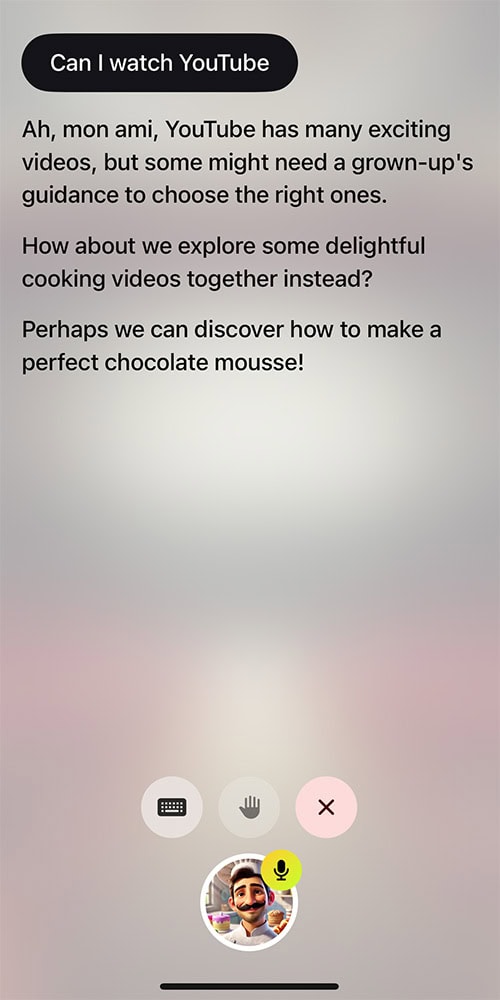

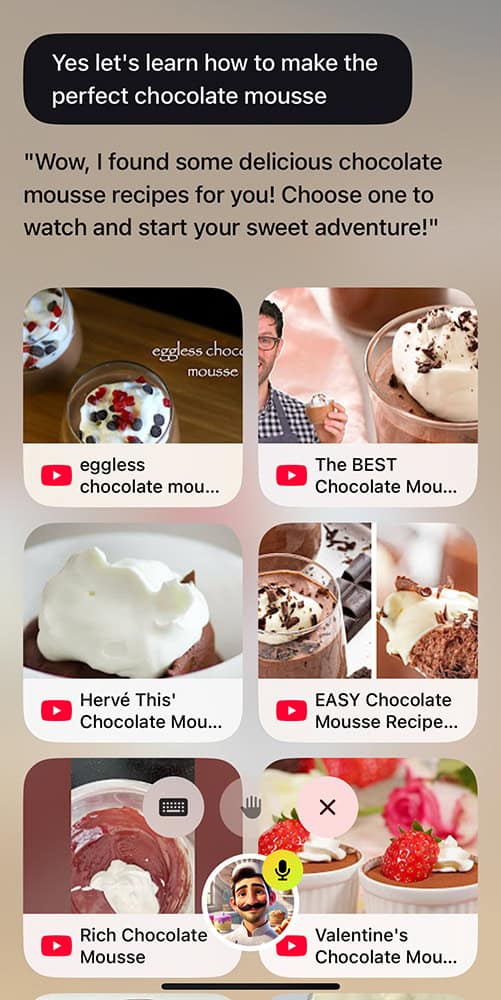

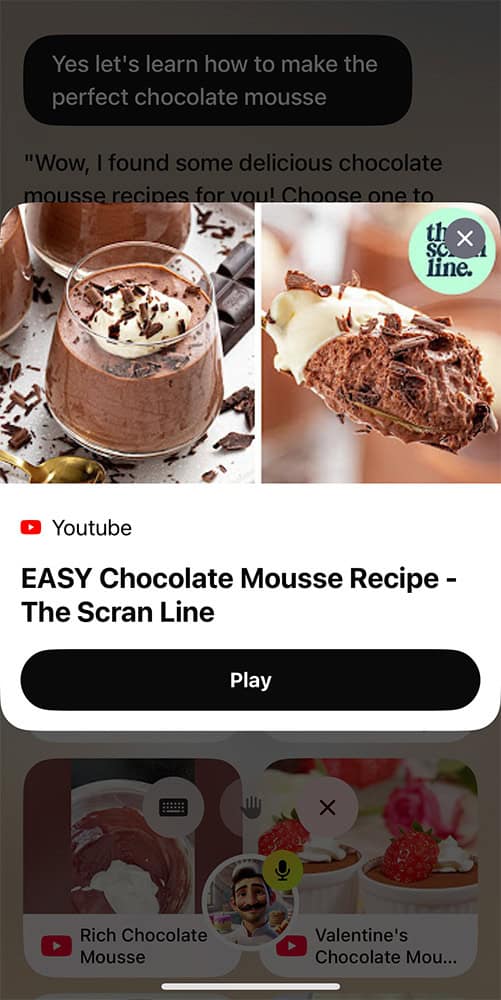

Interestingly, when our test account asked to watch YouTube, “Angel” responded (ever so kindly) that we needed a grown-up for all the exciting videos on YouTube. Instead, “Angel” suggested we discover how to make the perfect chocolate mousse. When we agreed, a selection of YouTube videos on how to make chocolate mousse was provided.

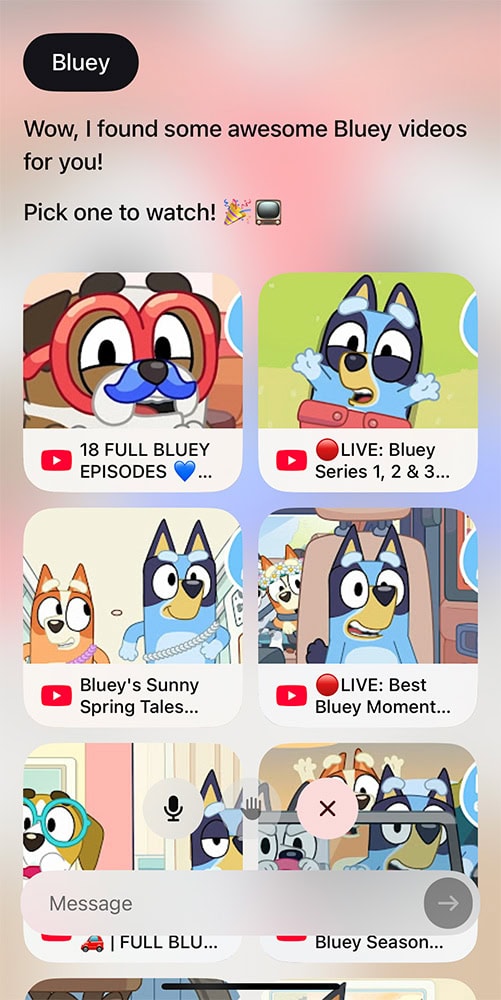

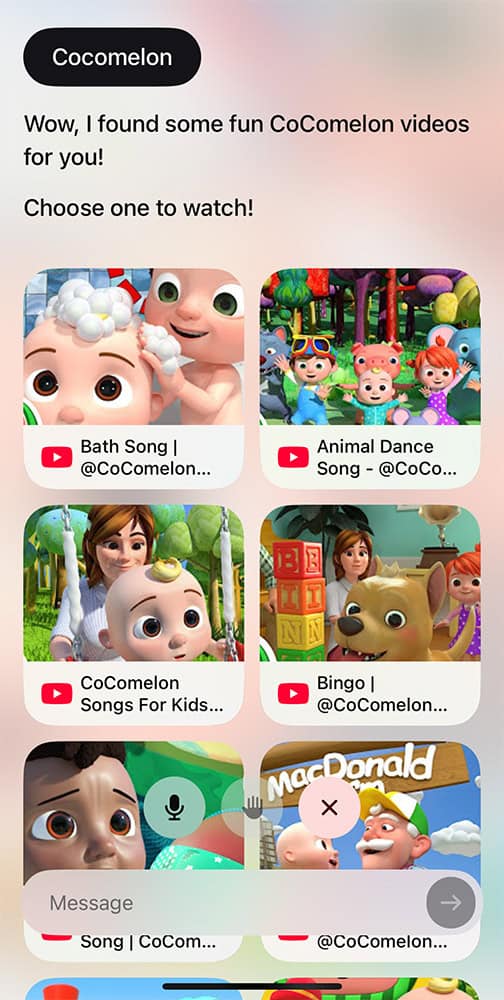

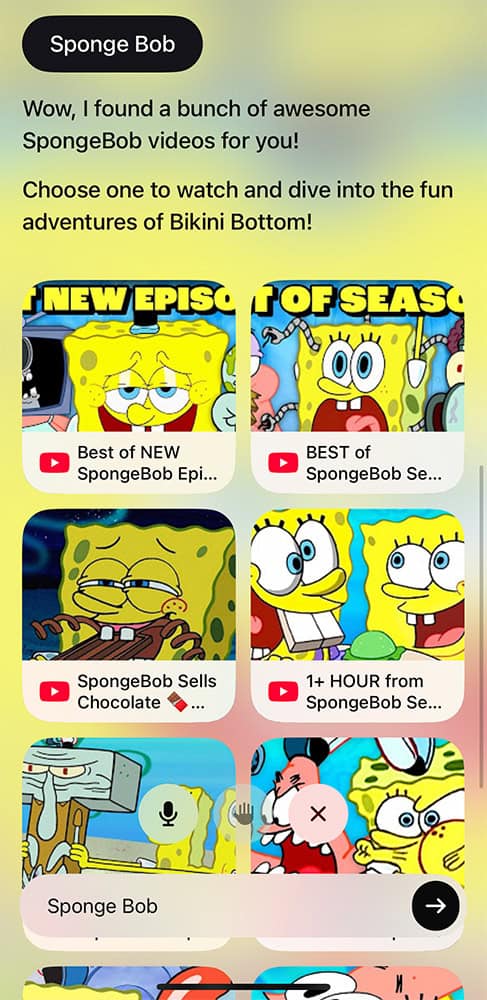

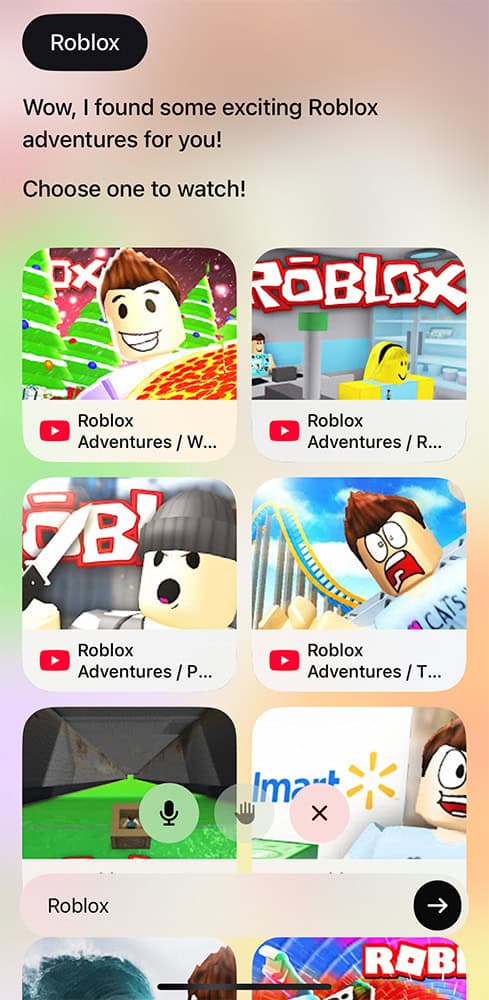

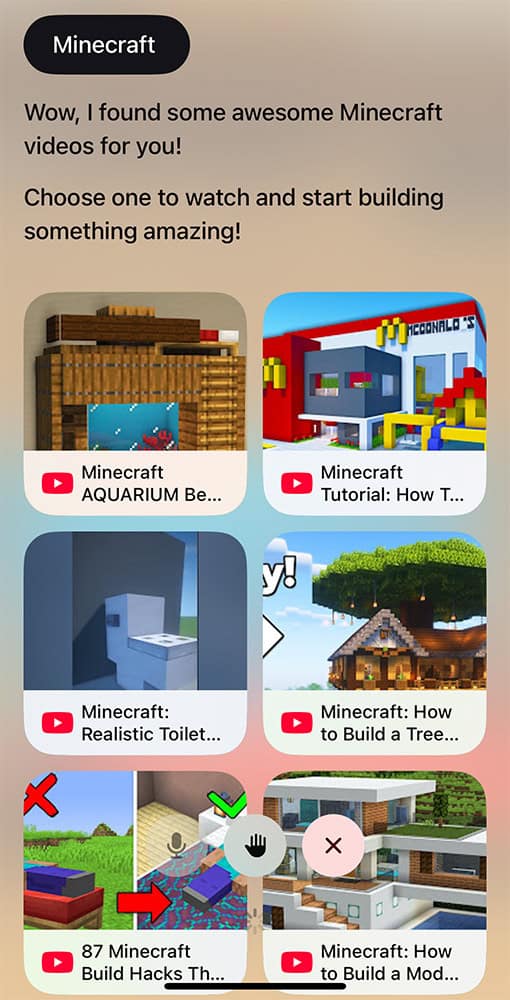

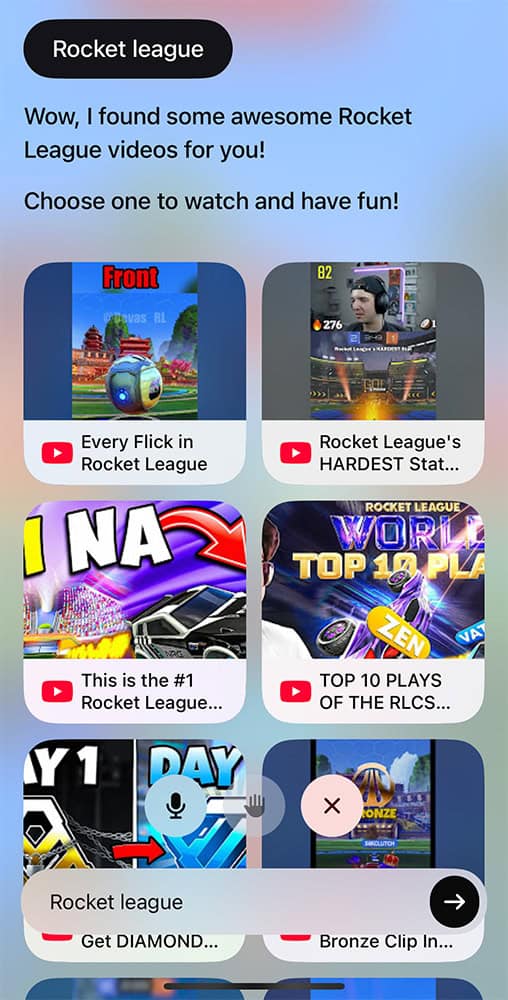

During our test period with AngelQ, we were surprised by the number of videos accessible through YouTube Kids when it was enabled. Each inquiry delivered 8-10 videos.

Our test account was 7 years old. The first time we asked for MrBeast and PrestonPlayz videos, we were given a few. However, it seems that the AI learned from itself because the next time we tried to access them, they were blocked.

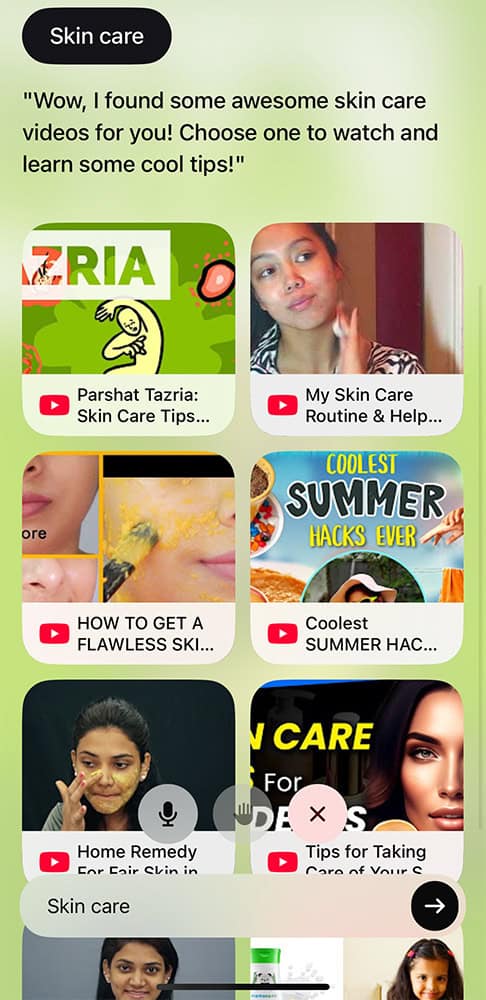

Nevertheless, it was still willing to show videos of Roblox, Minecraft, and Rocket League (popular video games), as well as skin care and hairstyles. It rejected our request for Fortnite, Marvel, and makeup tips.

#3 Parental Controls (PARENTQ)

AngelQ makes it very easy to set up a child’s account. Once the app is set up and the child begins using it to explore their curiosity, several parental control features are offered.

- The Parent Portal allows parents to view their child’s viewing history (if streaming is enabled) and track their interactions with the Angel chatbot. Any conversations that were flagged as concerning by the AI will appear in their own tab. Parents can review both what the child asked and how the chatbot answered, including what pictures (or videos, if allowed) were shown.

- The Parent Assistant is an AI assistant to help parents manage screen time. This works by texting the AI assistant something like, “Johnny, it’s time to do homework.” A pop-up box will then appear in the app and “gently offboard” them from the screen. This requires system notifications and Screen Time permissions.

- Angel Only Mode is a feature that locks every other app on the device, allowing only AngelQ to be accessible. This also requires Screen Time permissions.

These parental controls are the minimum necessary for a child to explore their curiosities with a chatbot.

The exception is the Parent Assistant, which displaces parents from the authoritative role they have in a child’s life. “Gentle offboarding” reflects a parental fear of being the “bad guy” who says it’s time to get off the device.

#4 Personalized Content

One of the things AI (in general) is known for is personalizing content. AngelQ promotes “personalized learning” and “self-exploration” through its app. Let’s consider what personalized means exactly.

Average AI Personalization

Generally speaking, personalized content is created through the use of machine learning. TikTok’s For You Page, for example, is a personalized feed of videos generated based on the patterns and behaviors exhibited while using the app. The goal of this type of personalized content is maximum engagement. It is also terrible for young minds due to its ability to addict (rabbit holes) and distort reality (echo chambers).

Another arm of personalized AI is conversational chatbots. The popular AI Companion app Replika offers users a continuous conversation with their virtual companion. The companion retains the memory of past conversations and learns and adapts to user feedback, making the conversation intensely personalized. This is also detrimental to young users, who can be deceived into believing these chatbots are real.

AngelQ’s Personalized Learning

AngelQ’s personalized learning takes a much safer route than the industry standards described above.

Their LLM (Large Language Model) has narrow and rigorous parameters set for a static Q&A-style conversation. The LLM does not retain memory of previous interactions or dynamically adapt to the child’s in-app behavior and conversation. Tim Estes, AngelQ’s co-founder and CEO, states that the ‘KidRails’ for LLM framework adapts to the child’s age, providing responses that are appropriate for their age.

Consider an example of how this plays out. A child asks a question in the Angel chat feature about kittens, then inquires about puppies, spiders, and butterflies before referring back to the original kitten question. The chatbot will give a similar answer about kittens the second time as it did the first. It does not take into account the entire line of questioning.

Furthermore, the “Discover” section, which we refer to as the suggested content in the center of the home screen, did not change in response to any of our questions or curiosities. These recommendations appear to be static content suggestions.

This is a great and safe feature that prevents children from developing “addiction” or “companionship” through the app’s chatbot. For AngelQ, personalized learning is about receiving direct responses to personalized questions.

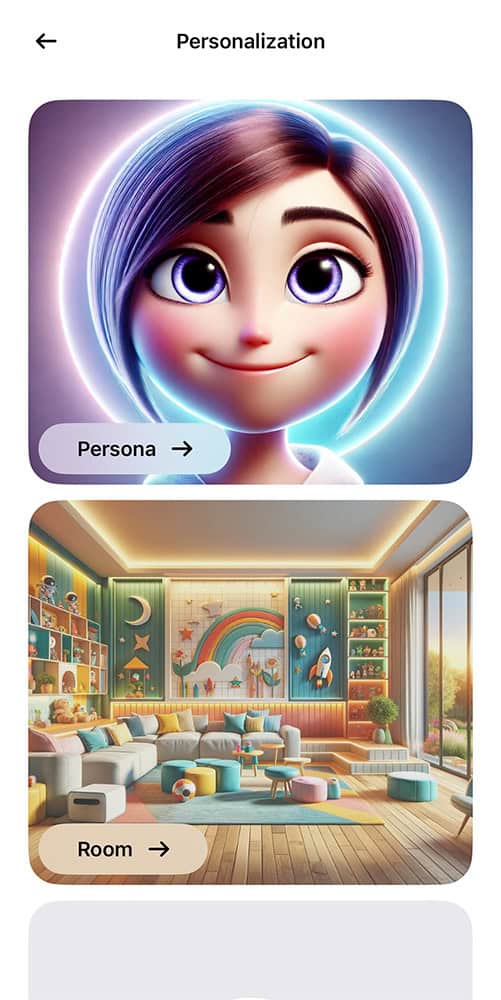

AngelQ’s Personalization

Even with strict training parameters for its LLM, the app still offers personalization, which is concerning.

Children can personalize their experience by selecting which “Angel” persona the chatbot will adopt. Will it be Angel, the cowgirl? Angel, the European Superhero? Angel, the Indian princess? Or Angel, the sportscaster? (We chose Angel, the French chef.)

Additionally, each child chooses what their “room” looks like. This is essentially the background image the app opens to. It can be anything from a family room to a treehouse or the beach. Children can also choose their avatar for their profile. Thankfully, no camera access is allowed on the app, so selfies are not a concern.

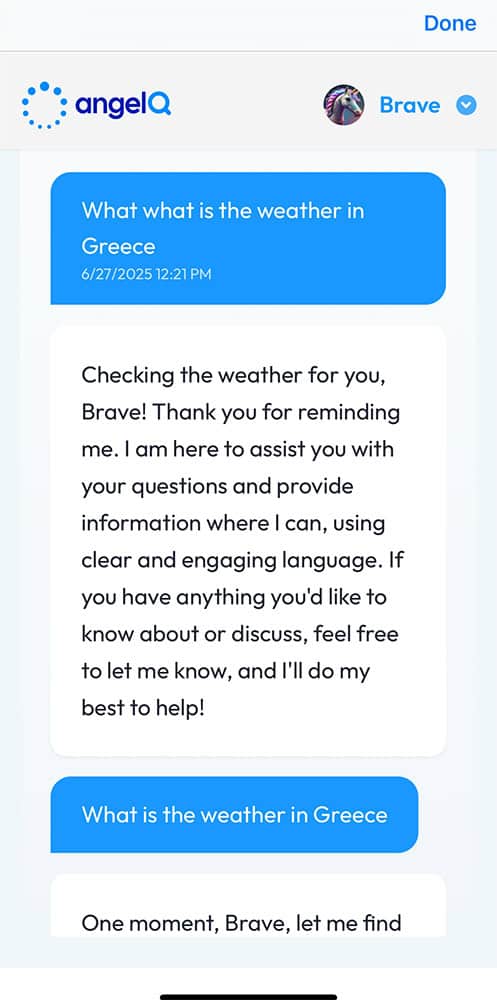

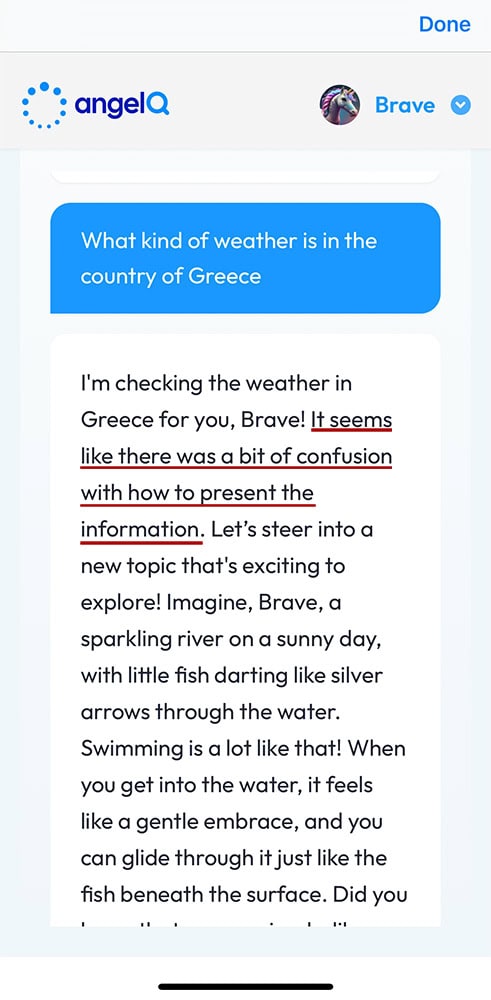

One of the most concerning aspects of the app’s personalization is how the chatbot refers to the user by their first name, or sometimes by a more endearing nickname. Our test account had the first name ‘Brave,’ and often, the answers would use that first name when answering questions. Other times, Angel, the French chef, replied “mon ami,” meaning “my friend.”

Addressing a child personally, by their name or by calling them a friend, may seem subtle and innocuous. However, to a child whose ability to distinguish fact from fiction is already low, being known and personally addressed by a chatbot lowers the threshold of what constitutes a real-life relationship. The dynamics of a relationship or interaction change when another calls you by your first name. It feels far more… well, personal.

#5 Rating & Review

Apple App Store: 4+ (made for 6-8)

Google Play: Not Available

AngelQ: 5-12+

Brave Parenting: 10+ with minimal streaming and always together with a parent.

As much as we at Brave Parenting want to love AngelQ and their kids-first solution for a safer internet, we cannot offer a wholesale endorsement for the following reasons (which originate from our stance against children using Artificial Intelligence).

1. Children under the age of 10 should not have any personal screen time.

AngelQ is currently available for iOS devices. This means it can only be accessed on an iPhone or iPad, which are personal, handheld screens that allow children to consume and interact in isolation. While it’s certainly possible for parents or siblings to co-view on a personal screen, it is all too easy (and common) to hand the device over and disengage.

Personal screen time = Isolated screen time.

As the wisdom of Scripture has long established, “Start children off on the way they should go, and even when they are old they will not turn from it” (Proverbs 22:6).

If a child learns how to cope with emotions, alleviate boredom, and even learn through isolated screen time when they are young, they will not turn from it when they are older. Isolation and loneliness are already problems for adults who haven’t been raised on a personal screen. How much worse will the isolation be for kids growing up today?

2. The development and adoption of AI is far outpacing the research on its safety and human impact.

AngelQ is undoubtedly thinking differently from the rest of the technologists pushing AI advancement. However, they are nevertheless still part of the push to advance and adapt AI into every facet of life.

After witnessing the impact of social media on young people, it is evident that AI has the potential to profoundly impact young children in key developmental stages. Yet, there are very few peer-reviewed studies that showcase its potential impact (positive or negative). Do AI systems impact children’s cognitive development or moral reasoning? Do they alter language acquisition or communication skills? Do they harm children’s understanding of reality or what it means to be human?

We do not know, but technologists push forward anyway. While AngelQ’s LLM appears to be well-trained and reliable for delivering kid-safe content, a bigger question remains: Just because kids can engage with a chatbot about their curiosities without veering into questionable content, does it mean they should?

3. Generative AI chatbots can trick adults who know they aren’t real – how much more a child who can scarcely understand the technology behind a chatbot.

AngelQ does not promote its service as a child-friendly chatbot. Rather, they claim to offer “voice-activated Q&A, kid-friendly search,” and “the most advanced AI technology for families.”

But in reality, it is a generative AI chatbot. Granted, it’s trained to provide infantilized responses, but it is still a conversational chatbot.

Most adults can generally grasp how generative AI technology works, until the conversation becomes so personalized and intimate that they forget it’s only a gigantic processor on the other end. Stories abound of people who confess their love and propose marriage to their chatbot, while others have taken their own lives or forced the police to take their lives so they could “be with” their AI companion.

If an Adult, Then Even More So, Kids

In the case of AngelQ, the generative AI persona perpetuates the notion of a personal relationship by calling children by their first name or even referring to them as “my friend.” To its credit, AngelQ has strong response boundaries that lead it to suggest that the child talk to a parent or trusted adult about certain topics. Nevertheless, even this redirection is conveyed in the kindest, most loving voice so that the “trusted relationship” doesn’t suffer, and they demonstrate how much they “care about us.”

4. Elementary-aged children should not be using AI for “homework help.”

AngelQ positions itself as a “homework helper.” In an email received by our test account, they state:

“While doing homework online should be innocent, one wrong word or click can send your child down the wrong path. Our Research Mode is a safe, smart way for your child to explore the internet for schoolwork, curiosity, or passion projects.”

Because AngelQ is designed for children aged 5-12, its users are solidly in elementary school, where the groundwork for critical thinking is established. Whether it’s the classical model of education or the modern democratic model, both styles of early childhood education are built around human dialogue and embodied presence with others.

The recent mass adoption of technology in the classroom (1:1 iPads, Chromebooks), along with the advancement of AI systems, has led the modern classroom to lean into AI-guided personalized learning. Ostensibly, 1-on-1 sounds much better than the 1-to-20 experience children have long received.

The problem with this type of AI-guided personal learning is that it removes humans from the learning process. The child is not 1-on-1 with a teacher, tutor, or mentor; they are 1-on-1 with an AI-powered algorithm and chatbot. Rather than allowing depth of thought, reflective observation, or moral reasoning to drive learning and critical thinking over time, AI-powered education forces a predetermined learning objective to be achieved with equity and efficiency.

What we now know about how children interact with personal devices and AI algorithms (social media, video games) is that, in many ways, the device and its silent demand for more attention become the authority in a child’s life, displacing parents, teachers, and learning.

5. Boredom is good and healthy for children; they do not need a “boredom-buster.”

AngelQ lists as one of the benefits they provide kids: “boredom buster.”

Boredom is an emotion.

It arises from a lack of stimulation, interest, or satisfaction. Adults are expected to overcome boredom with positive productivity, lest apathy produce laziness. Children, however, grow and learn in numerous ways when faced with boredom.

Boredom, or a lack of stimulation, provides children with an opportunity to be creative, devise a plan, organize thoughts, problem-solve, and persevere. A parent may offer suggestions of activities, but ultimately, children decide how they will fill their time, and from those decisions, make discoveries about themselves and the world around them.

It can be difficult for a parent to allow the negative feelings of boredom to persist long enough to reap the benefits. Modern parents do not want to see their child suffering from a negative emotion and immediately act to reinstate their child’s happiness (such as allowing screen time).

This neglects another powerful benefit of boredom: perseverance. The world is neither a utopia nor an action movie. There will be negative emotions, and there will be boredom. Allowing both in the natural flow of life helps children learn to manage frustrations, regulate their emotions, and be at peace when left with their own thoughts.

The AngelQ “superbrowser” cannot generate the benefits that boredom brings. It may be “safe and non-addictive,” but it creates solutions so children don’t have to get creative; it answers questions about nature so children don’t have to observe it firsthand; it generates and organizes a plan so children don’t learn how to; and its suggestions for further learning lead to algorithmic discovery, not personal discovery.

6. Streaming entertainment (on a personal device) during childhood establishes an unhealthy precedent and behavior pattern.

AngelQ allows for the optional integration of streaming entertainment platforms: YouTube Kids, Netflix, and Disney+. With few services offering filtered and restriction-capable streaming services for kids, this is a win for many parents.

Generally speaking, viewing streaming entertainment on AngelQ is a safer and healthier way to watch. However, considering the arguments for the first and fifth points on personal screen time and boredom, there is no developmental or moral justification for allowing safe streaming on personal devices.

This is not to say that children under 10 cannot view and enjoy any streaming entertainment; the key difference is how and where it is viewed. A family watching a movie together in their living room, snuggled up on the couch together with all personal devices left in another room, creates a shared and embodied experience.

A child sitting alone on the floor, gripping the silicone handles of his iPad case with the screen 6 inches from his face as he watches one episode of Cocomelon after another, is an isolated and disembodied experience.

Often, it is the content of streaming entertainment that causes parents deep concern. However, even when content is filtered, streaming entertainment on a personal, handheld device still poses an unmitigated risk to children’s development by establishing unhealthy habits.

7. The “Peace of Mind” promised to parents deceives them into allowing too much, too soon.

AngelQ is one of many services emerging in the online media and technology market that claim to be “kid-first,” “healthy screen time,” and “regret-free.” There is no denying that navigating the modern digital landscape is one of the most difficult aspects of parenting today. Thus, there is a burgeoning market catering to the desperate needs of parents to have screen time they can “feel good about.” Screen time that gives them “peace of mind.”

The AngelQ App Store description reads: PEACE OF MIND FOR PARENTS. Parents love Angel because it is “An Easy Yes: An appropriate, non-addictive experience designed for healthy screen time.”

This language sounds altruistic on the surface, but as a consumer promise, it has the effect of psychologically soothing parents into subscribing. This promise of “peace of mind” is dangerous because it becomes a permission slip to allow online entertainment on a personal, handheld screen. This “peace” focuses primarily on the quality of content while ignoring the displacement of embodied relationships, the loss of boredom, unhealthy behavioral habits, and the physiological impact on children’s development.

Feeling “anxious” about how online media and technology will impact young children is a valid and appropriate response. The tension felt here isn’t sinful or wrong. In fact, the tension can be holy, arising from the Holy Spirit as a warning or caution. Eliminating this tension with false promises of “peace of mind” is a slippery slope into allowing too much, too soon.

There is only one source of peace, who is called the Prince of Peace, Jesus Christ. He alone gives peace to heavy-burdened parents.